Generative AI Landscape

The Generative AI field is booming, with new commercial and open-source Large Language Models (LLMs) popping up monthly. At Intento, we stay on top of the latest tech to fuel our Enterprise Language Hub. Here’s our handy free guide. Enjoy!

March 2024 Update

In the latest round of Generative AI updates, Google and OpenAI stand out with major model releases and upgrades.

Google expanded its PaLM 2 model family with text-unicorn@001, text-bison@002, chat-bison@002, and variants with an extended 32k-token context window. It also unveiled its new multimodal Gemini models, with Gemini 1.5 Pro showcasing strong language translation, even in low-resource languages.

OpenAI, not to be outdone, rolled out an updated GPT-4 Turbo with a 128k-token context window and fixes for crucial bugs. It also cut input and output prices for GPT-3.5 Turbo and began experimenting with memory features in ChatGPT.

Meanwhile, OpenAI’s new text embedding and moderation models bring significant improvements in efficiency and capability.

On the open-model side, xAI released Grok-1 and Databricks released DBRX, both competitive Mixture-of-Experts (MoE) models. AI21 introduced Jamba, a hybrid Mamba-Transformer model that promises three times the throughput on long contexts.

Together, these releases show intense competition among leading AI labs and point toward more accessible, efficient, and versatile AI applications.

Generative AI Landscape Summary

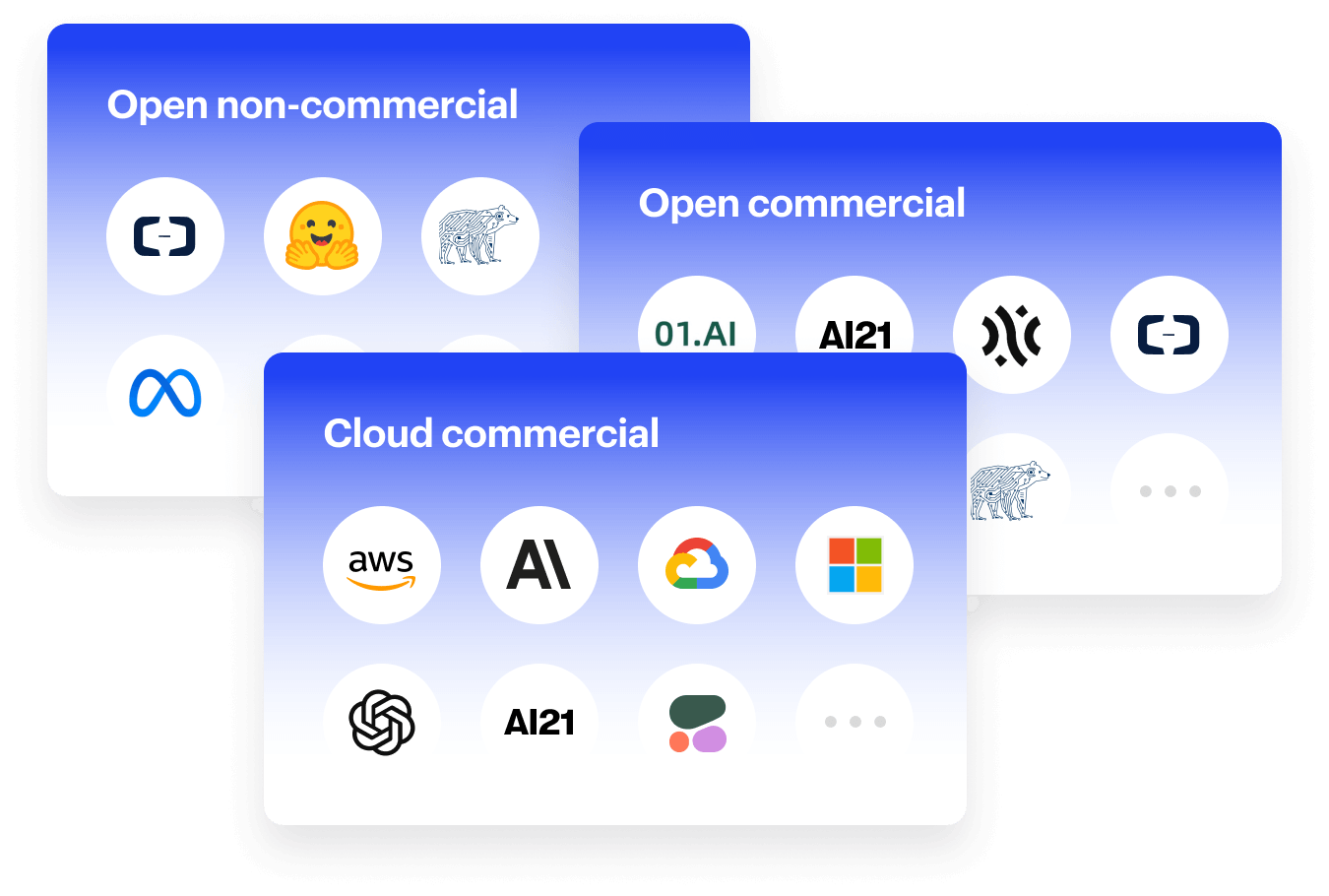

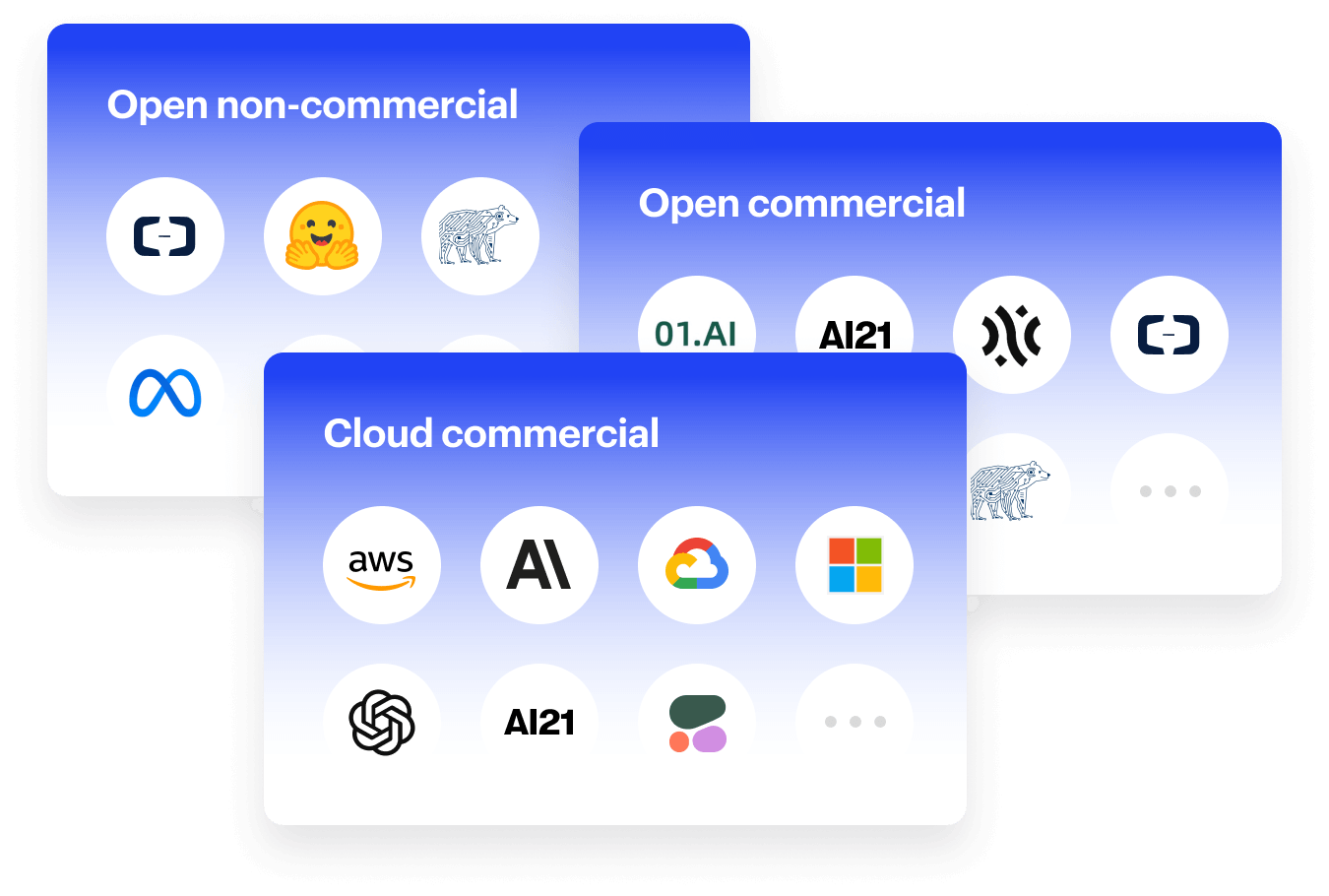

As the Generative AI sector expands, a clear map of the LLM landscape becomes essential. Our guide categorizes the latest LLMs for both commercial and research use. For Commercial Cloud LLMs, it also shows which models you can customize (for example, through fine-tuning), so you can pick the right one to train on your own data. The goal is to make GenAI and LLMs easier to navigate so you can put these technologies to work in your Enterprise Language Hub. Here are the key categories:

Commercial Cloud LLMs

Commercial Cloud LLMs are enterprise models hosted by their providers in the cloud and widely used to power AI-driven applications. They scale on demand and offer a range of options for customizing them to business needs, which makes them suitable for many use cases.

Commercial Open LLMs

Commercial Open LLMs combine the adaptability of open source with commercial backing and licensing for business use, which suits companies that want to innovate within a community-supported ecosystem. These models offer the highest level of customization, provided you have the talent, funds, and data to set them up and maintain them. Always check the license of the specific model version you use, since providers sometimes change license terms between versions.

Non-commercial Open LLMs

Non-commercial Open LLMs are aimed at academic and research work. They support innovation and exploration but prohibit or restrict commercial use. Typically, you must contact the model provider to negotiate a custom commercial license.

Model Adjustment Techniques for Commercial Cloud LLMs

Adjusting Commercial Cloud LLMs is vital for aligning them with specific business objectives. Our guide covers:

Baseline-only models

Baseline-only models are adapted to a task through prompt engineering rather than retraining. You customize their behavior with one-shot or few-shot prompting (including one or several worked examples in the prompt), sometimes combined with additional parameters such as temperature, context, or function calling. Typically, the most capable commercial cloud LLMs launch as baseline-only models, and customization options are added later.
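To make one-shot and few-shot prompting concrete, here is a minimal Python sketch that assembles a chat-style few-shot prompt. It assumes a provider that accepts a list of role/content messages plus a `temperature` setting; the `build_few_shot_messages` helper and the `request` dictionary are hypothetical, real parameter names vary by provider, and the sketch does not call any actual API.

```python
from typing import TypedDict


class Message(TypedDict):
    role: str
    content: str


def build_few_shot_messages(
    instruction: str,
    examples: list[tuple[str, str]],
    query: str,
) -> list[Message]:
    """Assemble a chat-style prompt: one example pair is one-shot, several are few-shot."""
    messages: list[Message] = [{"role": "system", "content": instruction}]
    for source, target in examples:
        # Each demonstration is a user turn followed by the desired answer.
        messages.append({"role": "user", "content": source})
        messages.append({"role": "assistant", "content": target})
    # The real query comes last; the model is expected to continue the pattern.
    messages.append({"role": "user", "content": query})
    return messages


# Hypothetical request payload; field names differ between providers.
request = {
    "messages": build_few_shot_messages(
        "Classify the sentiment of each review as positive or negative.",
        [
            ("The support team solved my issue in minutes.", "positive"),
            ("The invoice was wrong twice in a row.", "negative"),
        ],
        "Setup was quick and painless.",
    ),
    "temperature": 0.0,  # low temperature for more deterministic labels
}
```

Keeping the examples as alternating user/assistant turns lets the model infer the expected output format without any retraining, which is exactly what baseline-only models rely on.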

Customizable models

Some LLM providers let you customize models for specific datasets or tasks, improving their performance and relevance for your particular business challenges. Training a model on a custom dataset can substantially improve accuracy and efficiency in tasks such as sentiment analysis and personalized content creation. This customization usually happens through fine-tuning, but some GenAI vendors offer more options: reinforcement learning from human feedback, distillation, grounding or retrieval-augmented generation (RAG), or a selection of pre-trained task-specific models.
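Fine-tuning starts with a dataset of example inputs and desired outputs. The minimal Python sketch below serializes such pairs into chat-style JSONL (one JSON object with a `messages` list per line), similar to the layout OpenAI's chat fine-tuning accepts. Other vendors use different formats, so treat the record layout and the hypothetical `to_chat_jsonl` helper as assumptions to check against your provider's documentation.

```python
import json
from collections.abc import Iterable


def to_chat_jsonl(pairs: Iterable[tuple[str, str]], system_prompt: str) -> str:
    """Serialize (input, expected output) pairs as chat-style JSONL, one example per line."""
    lines = []
    for user_text, assistant_text in pairs:
        record = {
            "messages": [
                {"role": "system", "content": system_prompt},
                {"role": "user", "content": user_text},
                {"role": "assistant", "content": assistant_text},
            ]
        }
        # ensure_ascii=False keeps non-English text readable in the file.
        lines.append(json.dumps(record, ensure_ascii=False))
    return "\n".join(lines) + "\n"


# Illustrative training pairs for a translation-style task.
training_pairs = [
    ("Translate to German: Your order has shipped.", "Ihre Bestellung wurde versandt."),
    ("Translate to German: Thank you for your patience.", "Vielen Dank für Ihre Geduld."),
]

jsonl = to_chat_jsonl(training_pairs, "You translate customer support messages.")
```

You would then save `jsonl` to a file and upload it through your provider's fine-tuning workflow; in practice, a useful dataset needs far more than two examples.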

